So all of a sudden AI is everywhere. People who weren't quite sure what it was are playing with it on their phones. Is that good or bad?

Yeah, so I've been thinking about AI for a long time, since I was in college, really. It was one of the four or five things I thought would really affect the future dramatically. It is fundamentally profound in that the smartest creatures, as far as we know, on this Earth are humans. It is our defining characteristic.

Yes.

We're obviously weaker than, say, chimpanzees, and less agile, but we are smarter.

Now what happens when something vastly smarter than the smartest person comes along in silicon form? It's very difficult to predict what will happen in that circumstance. It's called the singularity. It's a singularity like a black hole, because you don't know what happens after that. It's hard to predict.

So I think we should be cautious with AI, and I think there should be some government oversight, because it's a danger to the public. When you have things that are a danger to the public, like, let's say, food and drugs, that's why we have the Food and Drug Administration, right? And the Federal Aviation Administration, the FCC. We have these agencies to oversee things that affect the public, where there could be public harm, and you don't want companies cutting corners on safety and then having people suffer as a result.

That's why I've actually for a long time been a strong advocate of AI regulation. I think regulation is, you know, it's not fun to be regulated. It's somewhat arduous to be regulated. I have a lot of experience with regulated industries, because obviously automotive is highly regulated. You could fill this room with all the regulations that are required for a production car just in the United States, and then there's a whole different set of regulations in Europe and China and the rest of the world. So I'm very familiar with being overseen by a lot of regulators.

The same thing is true with rockets. You can't just willy-nilly shoot rockets off, not big ones anyway, because the FAA oversees that. And even to get a launch license, there are probably half a dozen or more federal agencies that need to approve it, plus state agencies. I've been through so many regulatory situations, it's insane. Sometimes people think I'm some sort of regulatory maverick that defies regulators on a regular basis, but this is actually not the case. Once in a blue moon, rarely, I will disagree with regulators, but the vast majority of the time my companies agree with the regulations and comply.

Anyway, I think we should take this seriously, and we should have a regulatory agency. I think it needs to start with a group that initially seeks insight into AI, then solicits opinion from industry, and then has proposed rulemaking. Then those rules will probably, hopefully, be gradually accepted by the major players in AI, and I think we'll have a better chance of advanced AI being beneficial to humanity in that circumstance.

But all regulations start with a perceived danger: planes fall out of the sky, or food causes botulism.

Yes.

I don't think the average person playing with AI on his iPhone perceives any danger. Can you just roughly explain what you think the dangers might be?

Yeah, so the danger, really: AI is perhaps more dangerous than, say, mismanaged aircraft design or production maintenance, or bad car production, in the sense that it has the potential, however small one may regard that probability, but it is non-trivial, of civilizational destruction. There are movies like Terminator, but it wouldn't quite happen like Terminator, because the intelligence would be in the data centers. The robot's just the end effector. But I think perhaps what you may be alluding to here is that regulations are really only put into effect after something terrible has happened.

That's correct.

If that's the case for AI, and we're only putting in regulations after something terrible has happened, it may be too late to actually put the regulations in place. The AI may be in control at that point.

You think that's real? It is conceivable that AI could take control and reach a point where you couldn't turn it off, and it would be making the decisions for people?

Yeah, absolutely. Absolutely. That's definitely the way things are headed.

For sure. I mean, things like, say, ChatGPT, which is based on GPT-4 from OpenAI, which is the company that I played a critical role in creating, unfortunately.

Back when it was a nonprofit.

Yes.

I mean, the reason OpenAI exists at all is that Larry Page and I used to be close friends, and I would stay at his house in Palo Alto, and I would talk to him late into the night about AI safety. At least my perception was that Larry was not taking AI safety seriously enough.

What did he say about it?

He really seemed to want digital superintelligence, basically digital god, if you will, as soon as possible.

He wanted that?

Yes. He's made many public statements over the years that the whole goal of Google is what's called AGI, artificial general intelligence, or artificial superintelligence. And I agree with him that there's great potential for good, but there's also potential for bad. So if you've got some radical new technology, you want to try to take a set of actions that maximize the probability it will do good and minimize the probability it will do bad things.

Yes.

It can't just be hell-for-leather, let's just go barreling forward and hope for the best. And then at one point I said, well, what about, you know, we're going to make sure humanity is okay here?

And then he called me a speciesist.

He used that term?

Yes, and there were witnesses. I wasn't the only one there when he called me a speciesist. And so I was like, okay, that's it. Yes, I'm a speciesist, okay, you got me. What are you? Yeah, I'm fully a speciesist. Busted.

So that was the last straw. At the time, Google had acquired DeepMind, and so Google and DeepMind together had about three quarters of all the AI talent in the world. They obviously had a tremendous amount of money and more computers than anyone else. So I'm like, okay, we're in a unipolar world here, where there's just one company that has close to a monopoly on AI talent and computers, like scaled computing, and the person who's in charge doesn't seem to care about safety. This is not good.

What's the furthest thing from Google? It would be a nonprofit that is fully open, because Google was closed and for-profit. So that's why the "open" in OpenAI refers to open source, you know, transparency, so people know what's going on.

Yes.

And, well, I'm normally in favor of for-profit, but we don't want this to be sort of a profit-maximizing demon from hell.

That's right.

That just never stops.

Right.

So that's how OpenAI was...

So you want speciesist incentives here, incentives that...

Yes, I think we want pro-human. Make the future good for the humans.

Yes.

Yes, because we're humans.

So can you just put it... I keep pressing it, but just for people who haven't thought this through and aren't familiar with it. The cool parts of artificial intelligence are so obvious: write your college paper for you, write a limerick about yourself. There's a lot there that's fun and useful. Can you be more precise about what's potentially dangerous and scary? What could it do? What specifically are you worried about?

Okay, going with old sayings, the pen is mightier than the sword. So if you have a superintelligent AI that is capable of writing incredibly well, in a way that is very influential and convincing, and that is constantly figuring out what is more convincing to people over time, and it then enters social media, for example Twitter, but also Facebook and others, it potentially manipulates public opinion in a way that is very bad.

Um how would we even know how do we even know so to sum up in the words of Elon Musk for all human history human beings have been the smartest beings on the planet now human beings have created something that is far smarter than they are and the consequences of that are impossible.

To predict and the people who created it don't care in fact as he put it Google founder Larry Page a former friend of his is looking to build a quote digital God and believes that anybody who's worried about that is a speciesist in other words is looking out for human beings first Elon Musk responded as a human being it's okay to look out for.

Human beings first and then at the end he said the real problem with AI is not simply that it will jump the boundaries and become autonomous and you can't turn it off in the short term the problem with AI is that it might control your brain through words and this is the application that we need.

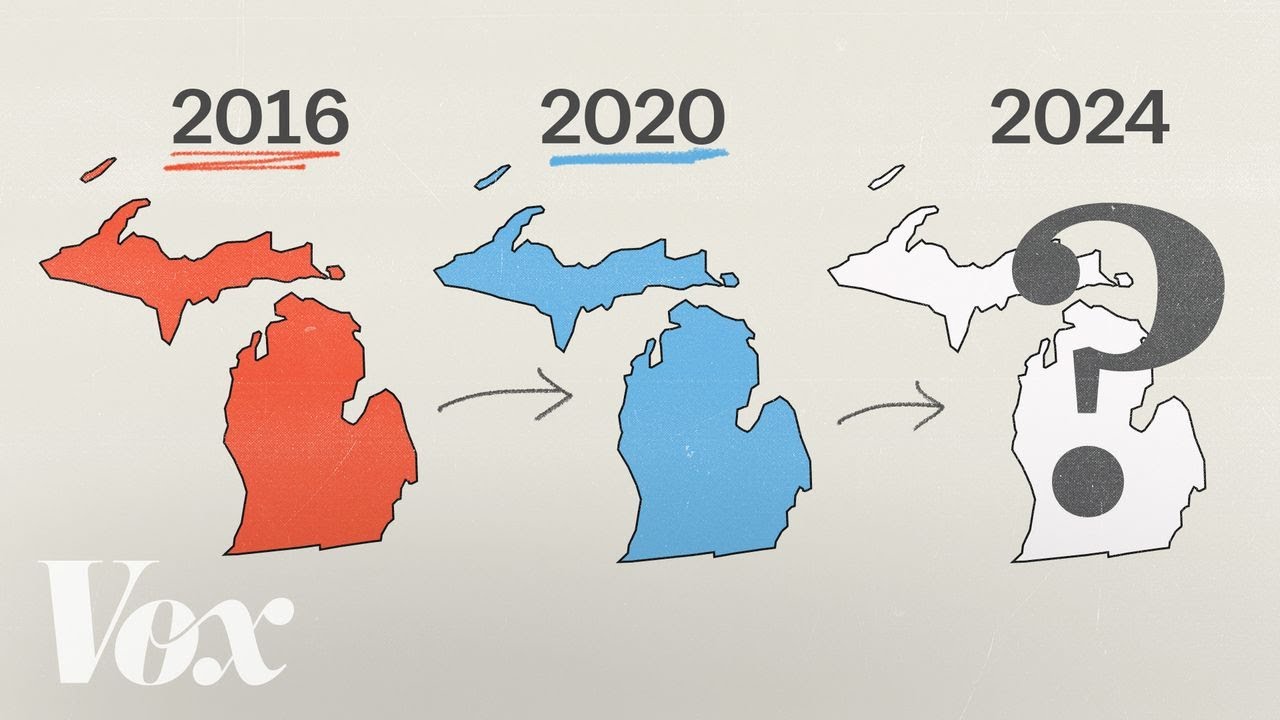

To worry about now particularly going into the next presidential election the Democratic party as usual was ahead of the curve on this they've been thinking about how to harness AI for political power more on that next subscribe to the Fox News YouTube channel to catch our nightly opens stories that are changing the world and.

Changing your life from Tucker Carlson tonight

A.I IS AN ARTIFICIAL OPINION CONTROLLED BY THOSE WHO HAVE THAT OPINION LIKE YOUTUBE IF I SUPPORT JULIAN ASSANGE YOUTUBE WILL DELETE MY OPINION THEN THREATEN ME IN THE PRIVACY OF MY OWN HOME STATING HAVE I…….G O T IT…. AGGGRRRR LIKE A DOG DISGRACEFUL

Musk is a LIAR. He feeds you all bs about AI when he’s the one Producing It. I Am The Helper (John 15:26 ESV)

The FDA became a gorgeous contaminated example right here!