– Do you notice anything off in this animated video of a cooking grandmother? – Notice the magic spoon that just randomly appears, and then randomly disappears. – Flaws like that can help viewers spot AI-generated videos from OpenAI. Its new text-to-video tool, Sora, created all of these clips, from scenic landscapes to bedazzled zoo animals, without a major production studio or team of animators. – When you think about some of the most recent Pixar movies, when you think about the amount of energy that they put into literally building every detail so that their hair moves in the right way and things, this stuff is now a computer doing it without a single person. – Users will be able to type in a prompt and their words are brought to life, like this.

But such innovation has raised concerns about the spread of misinformation, so being able to detect AI in video has become even more important. We'll share some tips on how to spot AI videos, and look at how this technology could be abused. – The funny thing about this, if you really watch the runner: aside from the fact they're going backwards and moving in multiple directions, if you watch how they're running, you'll see that their physical body is not matching the way a runner would run, right? In other words, you'll see their arms doing a double take, and that means their balance wouldn't work and they wouldn't be able to function. These are things that the AI still doesn't understand about the physical world.

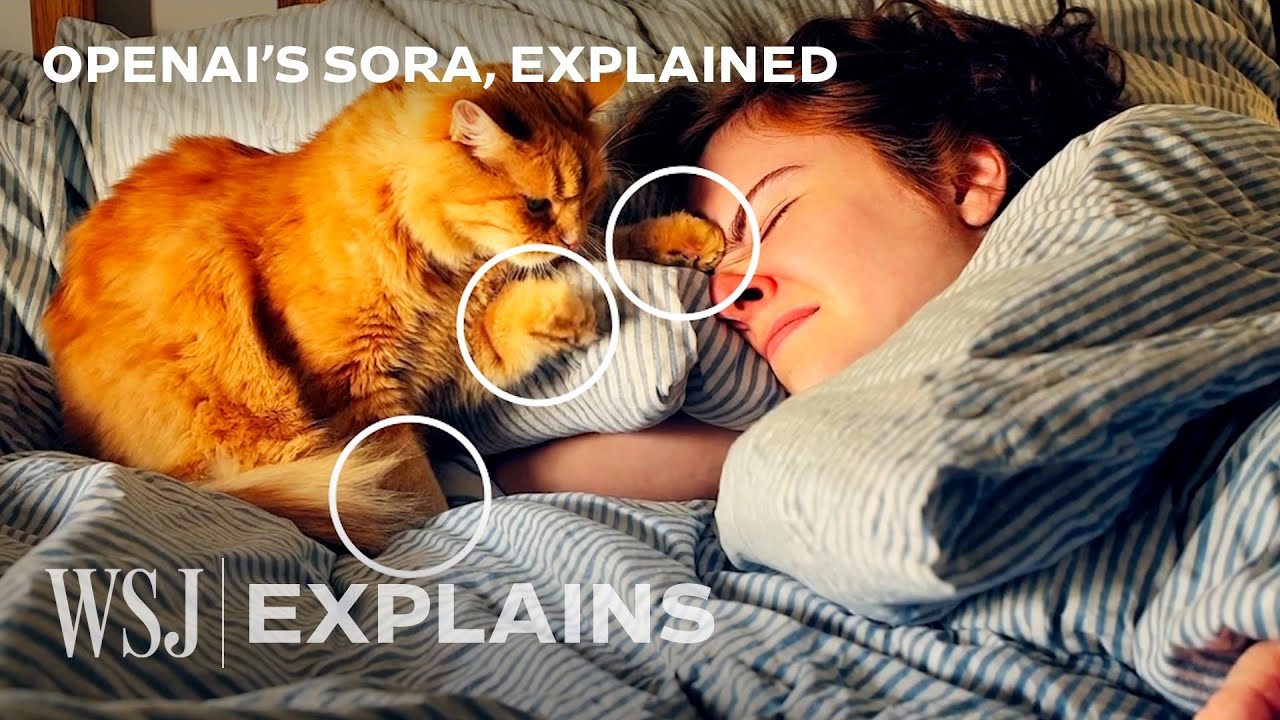

– That's Stephen Messer, the co-founder of an AI sales company called Collective[i]. He's worked in the AI industry for more than a decade, and now he's going to help show us how to spot AI-generated videos. Let's consider this clip of a cat waking up its owner. – Now, you look at this cat video, it is pretty amazing. One, really cute cat, but you probably notice that some of the physics, again, are off, right? Where the user switches over and flips over, the way the sheet flips over is a little bit weird. But if you look really closely and if you use your skill, you probably are noticing something's a little bit weird about the cat. There are two paws already out, and from the middle of the cat, a third paw magically appears. These are examples of some of the places where things are just a little bit off. Again, it always comes down to the physics of the real world.

– And when the platform simulates people, sometimes things may not feel quite right, like with the cooking grandmother from earlier. – So our senses are amazing at spotting weird things, things that just don't feel right.

If you look at the hands and the way our movements work, you'll notice that that is not how humans move their fingers or their body. – And then, we have hyper-realistic landscape shots like this one. At first glance, this might look like drone footage of the Amalfi Coast. – Look at the waves. The waves are going out, as opposed to in. Again, a physics problem that exists. – And that's not all. – The same thing with the stairwell. Look, you can see stairwells that sort of lead to nothing, stairwells that are all over the place because it was asked for them; it's just throwing stairwells in, not in a way that physics would demand for us to actually use them.

And so it's probably found different staircases in lots of videos, and it shoved them into the video. – Sora can simulate historical footage too, down to the grainy texture of an old film camera, but when you look closer… – You're gonna start to notice that there are houses from all different generations. I would also point out that, if you notice, in every western video you've ever seen, they don't have streets where one horse goes one direction and horses then come the other direction, which is what you'll see here, as if it's like a modern road. – And here, sadly, one of the horses melts into the ground mid-shot. OpenAI acknowledges the tool has some spatial issues, like in this scene set in Tokyo. – If you watch the cars going in the opposite direction, when they go through the trees, they disappear.

– Animated scenes can make it more difficult to tell if a video was created by AI. – Look, animations, you don't expect them to be perfect. In fact, part of the fun sometimes is that they do things that are physically impossible to do. – Here, the AI tool masters 3D geometry, but there are still a few things that feel off. – His eyes do not reflect the people in front of them, which is actually something you would expect to see. Fingers move in strange ways, but again, these are characters. Wile E. Coyote runs into, you know, a wall and runs through a truck. So these are probably the areas where you're gonna see a lot of usage in the beginning. – In another clip, of a paper coral reef, the generative AI shows more of a flair for storytelling and worldbuilding.

– Here what you're seeing is the level of creativity that humanity can have when working with tools like this. You can come up with almost any idea that nobody would've ever thought of before, and have such a high-quality rendering. I could see this being a movie that nobody ever thought about before. – Sora learned to create these types of animated characters from the data it was trained on; in this case, licensed and open-source video material. But right now, a number of lawsuits against OpenAI hinge on the question of whether publicly available copyrighted content is fair game for AI training. – I think mostly what you see is a content-created world seeing a new entity rise up, similar to the way Google did early on, where they're making money off the backs of other people's work. And I think that's naturally gonna lend itself to lawsuits. – Even though Sora hasn't been publicly released yet, some industry experts are already concerned about its potential for misuse.

– Tools like this will be used for powerful misinformation. There are bad actors that will look to take advantage of the fact that a lot of people cannot spot these differences. – OpenAI says it's taking actions to get ready for the 2024 presidential election, including prohibiting the use of its platforms for political campaigning. It's also developing tools that can tell when a video was generated by Sora. There are privacy concerns too. – If it's been trained on video from the internet, that means a lot of people who've been in videos on the internet, whether you uploaded your family holiday, et cetera, could in theory be used as these things progress. – But when it comes to actual filmmaking, experts say it will be a long time before text-to-video threatens the medium. Right now, the platform can only create clips that are up to a minute long, and because the AI model won't respond to similar prompts in exactly the same way, you couldn't combine 60 different one-minute AI clips into a coherent movie. – So all of these models tend to, what they call, hallucinate. They sort of go off the beaten path.

So the longer the video is, the more likely it is to fall apart. – But the tool could still transform other short-form content creator platforms. – If you're someone who's up and coming, and you think to yourself, I don't have the cash, I can't get the skill, I don't have these people, this becomes unbelievably democratizing to the world and their ability to bring things to market.

– And video creators have something else to get excited about. OpenAI says Sora is also able to generate videos from a single image. This would seemingly allow people to draw what's on their mind and animate it to life. – So when you think about that, you realize we're still at the very early stages of pretty powerful changes in the way videos are created.

(soft music)